TL;DR

AlignMind is an LLM-powered multi-agent system that transforms requirements refinement from brief, surface-level exchanges into deep, meaningful conversations. By incorporating Theory-of-Mind capabilities and a specialized multi-agent architecture, AlignMind achieves 8x higher lexical richness in requirements compared to baseline approaches, maintains longer conversations (13 vs 4 rounds), and produces significantly higher quality outputs as validated by LLM judges. The system demonstrates that sophisticated requirements refinement is achievable through AI-human collaboration.

This work was conducted in collaboration with Keheliya Gallaba, Ali Arabat, Mohammed Sayagh, and Ahmed Hassan. For more details about our methodology, implementation, and complete experimental results, please read the full paper: Towards Conversational Development Environments Using Theory-of-Mind and Multi-Agent Architectures for Requirements Refinement.

The Requirements Refinement Gap in the Agentic Era

Foundation Models (FMs) rush to solutions. Research shows that in over 60% of cases requiring clarification, FMs generate code rather than asking necessary questions. This premature solutioning leads to:

- Misaligned functionality

- Costly rework

- Project failures

Consider a user requesting “a system that can quickly search through my documents.” Critical ambiguities remain:

- Document types (text, images, mixed?)

- Search criteria (keywords, metadata, full-text?)

- Performance expectations (milliseconds, seconds?)

Current FMs fail to explore these nuances, compromising solution quality.

The AlignMind Approach: Four Key Pillars

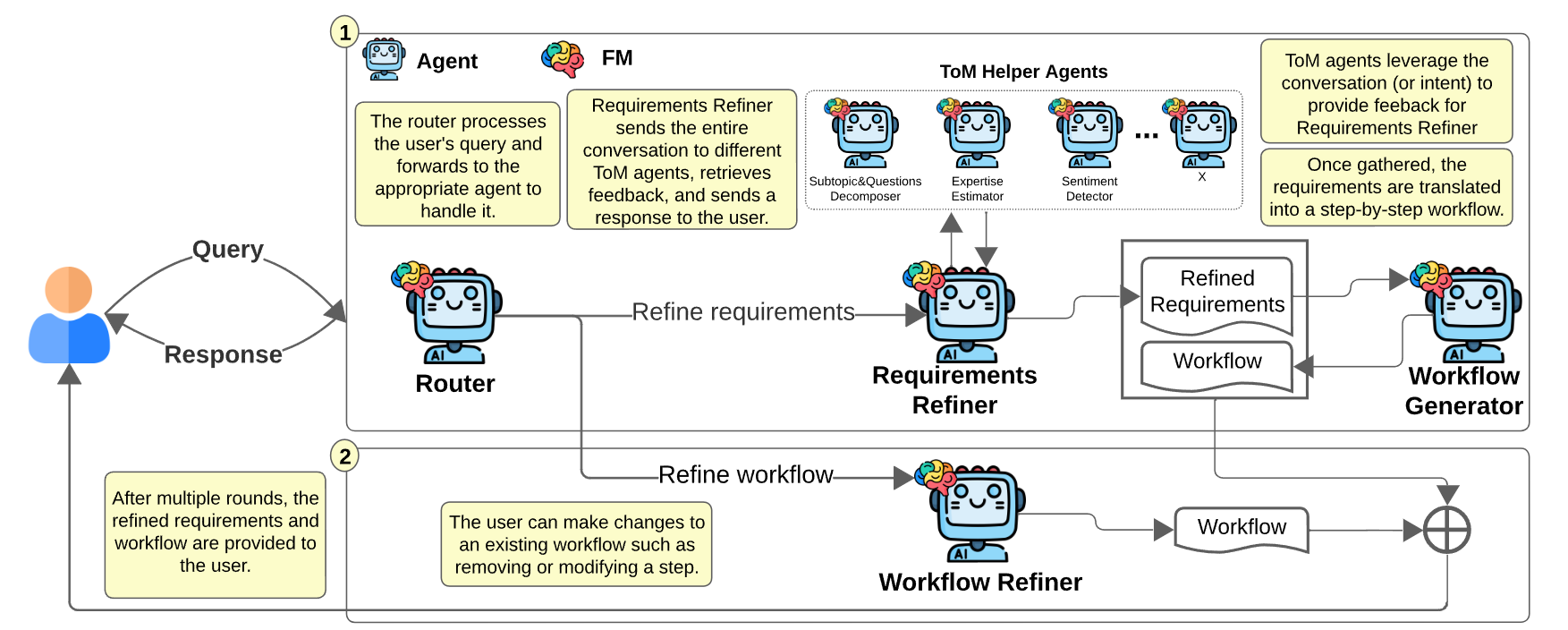

1. Multi-Agent Architecture

Instead of overloading a single FM with complex, multi-objective prompts, AlignMind decomposes tasks across specialized agents:

- Router Agent: Directs queries to appropriate handlers

- Requirement Refiner Agent: Conducts iterative clarification dialogues

- Workflow Generator Agent: Translates requirements into actionable steps

- Workflow Refiner Agent: Enables post-generation modifications

- ToM Helper Agents: Provide cognitive insights about user state
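The division of labor above can be sketched as a small dispatch table. This is a minimal illustration, not AlignMind's implementation: the `Conversation` fields, the stub agents, and the rule-based `route` function are all assumptions made for the example, and a real Router Agent would presumably make this decision with an FM call rather than fixed rules.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Conversation:
    """Shared state handed from agent to agent (hypothetical structure)."""
    messages: List[str] = field(default_factory=list)
    requirements_final: bool = False
    workflow: List[str] = field(default_factory=list)


# Each agent is a stub here; in a real system each would wrap its own FM prompt.
def requirement_refiner(conv: Conversation) -> str:
    return "ask the next clarifying question"


def workflow_generator(conv: Conversation) -> str:
    return "draft a step-by-step plan"


def workflow_refiner(conv: Conversation) -> str:
    return "apply the requested change to the plan"


AGENTS: Dict[str, Callable[[Conversation], str]] = {
    "requirement_refiner": requirement_refiner,
    "workflow_generator": workflow_generator,
    "workflow_refiner": workflow_refiner,
}


def route(conv: Conversation) -> str:
    """Choose the next agent from conversation state (rule-based stand-in for the Router Agent)."""
    if not conv.requirements_final:
        return "requirement_refiner"
    if not conv.workflow:
        return "workflow_generator"
    return "workflow_refiner"


def step(conv: Conversation) -> str:
    """Dispatch one turn to whichever agent the router selects."""
    return AGENTS[route(conv)](conv)
```

Keeping each agent behind a single-purpose function is what lets every prompt stay narrow: the router only decides who speaks next, and no agent has to juggle several objectives at once.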

2. Theory-of-Mind Integration

AlignMind incorporates ToM helpers that infer:

- User expertise level (Novice/Intermediate/Expert)

- Emotional state (Positive/Neutral/Negative sentiment)

- Intent decomposition into manageable subtopics

This enables contextually appropriate responses that align with user mental states.
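One way to picture the ToM output is as a small typed record that the refiner reads before phrasing its next question. The sketch below is an assumption-laden illustration: the category labels come from the list above, but `UserState`, `style_hint`, and the hint wording are hypothetical and not taken from the paper.

```python
from dataclasses import dataclass
from enum import Enum


class Expertise(Enum):
    NOVICE = "Novice"
    INTERMEDIATE = "Intermediate"
    EXPERT = "Expert"


class Sentiment(Enum):
    POSITIVE = "Positive"
    NEUTRAL = "Neutral"
    NEGATIVE = "Negative"


@dataclass
class UserState:
    """What the ToM helpers currently believe about the user."""
    expertise: Expertise
    sentiment: Sentiment


def style_hint(state: UserState) -> str:
    """Turn the inferred state into a prompt hint (illustrative wording only)."""
    hints = []
    if state.expertise is Expertise.NOVICE:
        hints.append("avoid jargon and offer examples")
    elif state.expertise is Expertise.EXPERT:
        hints.append("use precise technical terms")
    if state.sentiment is Sentiment.NEGATIVE:
        hints.append("acknowledge frustration and keep questions short")
    return "; ".join(hints) if hints else "use a neutral tone"
```

For example, a novice user with negative sentiment would yield a hint that combines plainer language with shorter questions, which the refiner could append to its prompt.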

3. Iterative Improvement

The system maintains persistent state across conversations, supporting:

- Multi-round refinement (a median of 13 rounds vs 4 for the baseline)

- Progressive requirement elaboration

- Real-time workflow adjustments

4. Intent Decomposition

Complex problems are broken into subtopics with targeted questions. For a weather app request, subtopics include:

- App User Needs and Goals

- Core Features

- Weather Data Sources and APIs

- Technology Stack

- Deployment Platforms

Each subtopic generates up to 5 clarifying questions, ensuring comprehensive coverage.
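The decomposition can be represented as a mapping from subtopic to a capped list of questions. In this sketch the subtopic names are taken from the weather app example, while the questions themselves and the `build_question_plan` helper are invented for illustration; in the actual system an FM would generate the candidates.

```python
from typing import Dict, List

MAX_QUESTIONS_PER_SUBTOPIC = 5  # the cap stated above


def build_question_plan(candidates: Dict[str, List[str]]) -> Dict[str, List[str]]:
    """Keep at most MAX_QUESTIONS_PER_SUBTOPIC questions for each subtopic."""
    return {
        subtopic: questions[:MAX_QUESTIONS_PER_SUBTOPIC]
        for subtopic, questions in candidates.items()
    }


# Subtopic names come from the weather app example; the questions are made up.
candidates = {
    "App User Needs and Goals": ["Who is the primary audience?"],
    "Core Features": [f"Placeholder question {i}" for i in range(1, 8)],
    "Weather Data Sources and APIs": ["Do you already have a data provider in mind?"],
}
plan = build_question_plan(candidates)
```

Here "Core Features" starts with seven candidate questions and is trimmed to five, while the shorter lists pass through unchanged.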

How AlignMind Works

The refinement process follows this flow:

- User submits initial query → Router Agent analyzes intent

- Topics & Questions Decomposer generates subtopics and questions

- Requirement Refiner engages in iterative dialogue, informed by ToM helpers

- After sufficient clarification, requirements are summarized

- Workflow Generator creates step-by-step natural language plan

- User can refine workflow through Workflow Refiner

The system uses two strategies to decide when a subtopic has been sufficiently covered:

- Self-check for sufficient question-answer pairs

- Hard cutoff at 5 questions per subtopic
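The two coverage strategies combine naturally into a single stopping test. The following is a minimal sketch under stated assumptions: `subtopic_covered` and `enough_non_empty_answers` are hypothetical names, and the toy self-check stands in for what would more plausibly be an FM judging whether the collected answers are sufficient.

```python
from typing import Callable, List, Tuple

QAPair = Tuple[str, str]
MAX_QUESTIONS_PER_SUBTOPIC = 5


def subtopic_covered(
    qa_pairs: List[QAPair],
    self_check: Callable[[List[QAPair]], bool],
    max_questions: int = MAX_QUESTIONS_PER_SUBTOPIC,
) -> bool:
    """Return True when the refiner should move on to the next subtopic."""
    if len(qa_pairs) >= max_questions:  # hard cutoff
        return True
    return self_check(qa_pairs)  # self-check on the answers so far


def enough_non_empty_answers(qa_pairs: List[QAPair]) -> bool:
    """Toy self-check: at least two questions received a non-empty answer."""
    return sum(1 for _, answer in qa_pairs if answer.strip()) >= 2
```

The hard cutoff acts as a safety net: even if the self-check never reports sufficient coverage, the refiner stops asking after five questions on a subtopic.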

Evaluation Results

Output Quality

FM Judge Panel Evaluation (150 scenarios, 3 judges):

- AlignMind median score: 10/10

- Baseline median score: 9.08/10

- 81.33% of scenarios showed improvement

- Statistically significant across all judges (p < 0.001)

Requirements Richness:

- AlignMind: 266.5 median content words

- Baseline: 33 median content words

- 8x improvement in lexical richness
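To make the richness metric concrete, here is a rough sketch of counting content words and of the ratio behind the 8x figure. The tokenizer and the short stop-word list are assumptions chosen for illustration; the exact procedure used in the paper is not described in this post and may differ.

```python
import re

# Tiny illustrative stop-word list; a real analysis would use a fuller one.
STOP_WORDS = {
    "a", "an", "and", "the", "of", "to", "in", "on", "for", "with",
    "is", "are", "be", "that", "can", "should", "through", "my", "it",
}


def content_word_count(text: str) -> int:
    """Count lowercase alphabetic tokens that are not stop words."""
    tokens = re.findall(r"[a-z]+", text.lower())
    return sum(1 for token in tokens if token not in STOP_WORDS)


request = "A system that can quickly search through my documents"
count = content_word_count(request)

# Ratio of the reported medians (266.5 vs 33 content words).
ratio = 266.5 / 33
```

With this stop-word list, the example request contains four content words ("system", "quickly", "search", "documents"), and the ratio of the two reported medians comes out just above 8.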

Conversation Depth:

- AlignMind: 13 median rounds

- Baseline: 4 median rounds

- Enables thorough exploration vs premature termination

Grounding and Hallucination

- Perfect consistency scores (5/5) for the majority of cases

- No statistical difference in hallucination between approaches

- Requirements remain grounded in user conversations

Real-World Insights from User Testing

Six software engineers (4-20 years of experience) tested AlignMind:

Positive Feedback:

- “Helps you reflect on and reason about requirements”

- “I wouldn’t have created such a detailed list myself”

- “Promising in assisting users to refine complex goals”

Identified Challenges:

- Surface-level “cookie-cutter” requirements

- Repetitive questions

- Templated conversation flow

These insights drove architectural improvements, particularly the ToM integration.

Implications for Future Development

Beyond Software Domain

AlignMind’s approach extends to:

- Healthcare: Translating clinical requirements

- Finance: Articulating compliance needs

- Automotive: Clarifying safety specifications

Plug-and-Play Architecture

Organizations can develop custom ToM modules tailored to their domains, enabling industry-specific requirement refinement while maintaining the core framework.
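As a rough idea of what a pluggable ToM module could look like, the sketch below defines a small interface and one toy finance-domain helper. Everything here is hypothetical: the `ToMModule` protocol, the `ComplianceFamiliarity` class, and its keyword heuristic are illustrations of the plug-in idea, not part of AlignMind.

```python
from typing import Dict, List, Protocol


class ToMModule(Protocol):
    """Hypothetical interface that a pluggable ToM helper could satisfy."""
    name: str

    def infer(self, messages: List[str]) -> str: ...


class ComplianceFamiliarity:
    """Toy finance-domain helper: guesses familiarity from keywords."""
    name = "compliance_familiarity"
    KEYWORDS = ("audit", "regulation", "kyc")

    def infer(self, messages: List[str]) -> str:
        text = " ".join(messages).lower()
        return "familiar" if any(k in text for k in self.KEYWORDS) else "unfamiliar"


def run_modules(modules: List[ToMModule], messages: List[str]) -> Dict[str, str]:
    """Collect one inference per registered module."""
    return {module.name: module.infer(messages) for module in modules}
```

Because the core framework would only depend on the interface, a domain team could register its own helpers without touching the refiner or router.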

The Multi-Modal Future

Requirements engineering inherently involves multiple modalities – verbal discussions, diagrams, documents. Future work should integrate multi-modal foundation models to capture this richness.

Conclusion

AlignMind demonstrates that meaningful requirements refinement is achievable through AI-human collaboration. By combining Theory-of-Mind capabilities with specialized agents, the system transforms brief exchanges into rich, comprehensive requirement specifications. While computational costs are higher, the 8x improvement in requirement richness and significant quality gains justify the investment.

This work lays the groundwork for intent-first development environments where AI collaborators deeply understand and co-create software aligned with true stakeholder intentions.