TL;DR

Watson is a cognitive observability framework that reveals the hidden reasoning processes of fast-thinking LLM-powered agents without affecting their behavior. By using a “surrogate agent” that mirrors the primary agent and generates reasoning traces verified through prompt attribution, Watson enables developers to debug and improve agent performance. In our experiments, Watson improved agent performance on MMLU by 7.58% (13.45% relative) and AutoCodeRover on SWE-bench-lite by 7.76% (12.31% relative) without any model updates or architectural changes.

This work is done in collaboration with Benjamin Rombaut, Sogol Masoumzadeh, Kirill Vasilevski, and Ahmed Hassan. For more details about our methodology, experimental setup, and future directions, please read the full paper: Watson: A Cognitive Observability Framework for the Reasoning of LLM-Powered Agents.

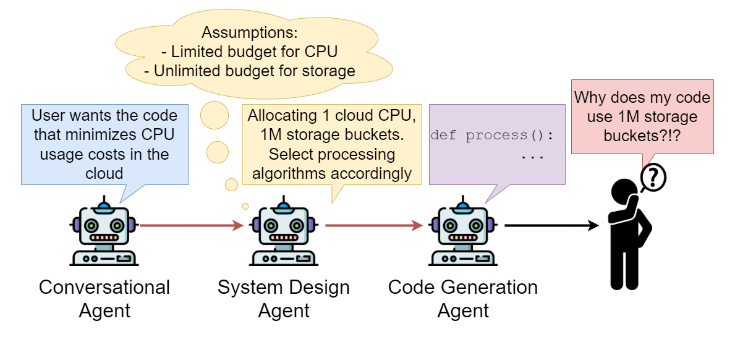

The Challenge: Debugging Black-Box AI Agents

As foundation models power increasingly complex software systems, we face a critical observability gap. While recent Large Reasoning Models (LRMs) like OpenAI’s o1 generate explicit reasoning, they come with significant latency and cost penalties. In many real-world scenarios—like code auto-completion or real-time customer support—we need fast-thinking LLMs that operate with opaque, implicit reasoning.

Traditional software observability relies on logs, traces, and performance counters that directly link to deterministic code execution. But AI agents make decisions through prompts and learned behaviors that:

- Cannot be instrumented like traditional code

- Don’t produce deterministic or reproducible results

- Lack clear traceability between inputs and decisions

This opacity makes it extremely difficult to debug when agents behave unexpectedly or make errors.

Introducing Cognitive Observability

We propose expanding observability for AI-powered software (Agentware) beyond operational metrics to include cognitive observability—the ability to observe not just what agents do, but why they do it.

Cognitive observability encompasses three critical sub-categories:

- Semantic feedback – User and system responses to agent outputs

- Output integrity – Sentiment analysis and hallucination detection

- Reasoning trace – Understanding the thought process behind decisions

Watson focuses on the third category: observing reasoning traces of fast-thinking LLMs without altering their behavior.

How Watson Works

Watson operates through a parallel “surrogate agent” that mirrors the primary agent’s configuration and attempts to reconstruct its reasoning process:

1. Mirroring Configuration

The surrogate agent maintains identical:

- LLM architecture

- Decoding parameters (temperature, top_p, etc.)

- Input processing capabilities

2. Generating Reasoning Paths

Watson uses two techniques to bridge the gap between input prompts and agent outputs:

Fill-in-the-Middle (FIM): For models that support it, Watson arranges the prompt as prefix-suffix-middle, using the primary agent’s input as prefix and output as suffix, then generates the reasoning that connects them.

Repetitive Chain-of-Thought (RepCoT): For models without FIM support, Watson prompts the surrogate agent to generate chain-of-thought reasoning, discarding any that don’t align with the primary agent’s output.

3. Verification Through Prompt Attribution

To ensure generated reasoning reflects the primary agent’s actual thought process, Watson:

- Generates multiple potential reasoning paths

- Validates each using PromptExp, which calculates how different prompt components influenced the output

- Confirms that factors mentioned in reasoning traces actually impacted the primary agent’s decision

- Summarizes verified reasoning traces into a “meta-reasoning”

Real-World Impact

We evaluated Watson on two challenging scenarios:

MMLU Benchmark Results

Testing on the Massive Multitask Language Understanding benchmark:

- Performance improvement: 7.58 percentage points (13.45% relative) for FIM version

- Key finding: Hint-assisted agents with Pass@1 (73.8%) matched or exceeded standalone agents at Pass@3 (73.8%), showing hints provide more useful information than multiple attempts

AutoCodeRover on SWE-bench-lite

For autonomous code repair tasks:

- Performance improvement: 7.76 percentage points (12.31% relative) at Pass@1

- Impact: Solved 8 additional issues from 38 incorrect but valid attempts

- Success rate: Increased from 63.11% to 70.87% on valid issues

Example: Debugging Legal Reasoning

In one MMLU professional law question about merchant offers, Watson revealed why an agent incorrectly chose option B (“Is valid for three months”) instead of the correct option A:

The generated reasoning showed the agent:

- Correctly identified the question’s focus on merchant offers

- Evaluated each option’s relevance

- Mistakenly prioritized the “validity period” characteristic

- Dismissed other options as not directly addressing the question

This transparency allowed us to create targeted hints that corrected the agent’s understanding, leading to the right answer.

Applications and Benefits

Watson enables:

For Developers:

- Debug agent failures by understanding reasoning pathways

- Refine prompts and agent interactions based on actual thought processes

- Identify patterns in agent errors across different domains

For Multi-Agent Systems:

- Agents can observe and correct each other’s reasoning

- Cross-checking between agents improves overall system performance

- Errors from early agents that manifest later can be traced back

For Production Systems:

- Runtime correction without model retraining

- Automated hint generation for improved performance

- Continuous improvement through reasoning observation

Conclusion

Watson demonstrates that we can achieve transparency in fast-thinking AI agents without sacrificing their speed or altering their behavior. By recovering and verifying implicit reasoning traces, we enable both human developers and autonomous agents to understand, debug, and improve AI decision-making.

The significant performance improvements—over 7% on both MMLU and code repair tasks—show that cognitive observability isn’t just about understanding agents; it’s about making them better. As AI agents become more prevalent in critical systems, frameworks like Watson will be essential for building trustworthy, debuggable, and continuously improving AI software.