TL;DR

PromptExp is a framework that reveals which tokens in your prompts most influence LLM outputs by providing multi-granularity explanations (token, word, sentence, or component level). We developed two approaches: aggregation-based (using techniques like Integrated Gradients) and perturbation-based (measuring the impact of masking individual tokens). In our experiments, the perturbation-based approach using semantic similarity performed best. In a user study, developers rated 84% of the explanations as reasonable or accurate and found them useful for tasks like debugging misclassifications and compressing prompts to save costs.

This work was conducted in collaboration with Ximing Dong, Shaowei Wang, Gopi Krishnan Rajbahadur, and Ahmed Hassan. For more details about our methodology, implementation, and complete experimental results, please read the full paper: PromptExp: Multi-granularity Prompt Explanation of Large Language Models.

The Black-Box Problem of LLM Prompts

Large Language Models excel at tasks from text generation to question answering, but their black-box nature makes it difficult to understand why they produce certain outputs. When a prompt doesn’t work as expected, developers often resort to trial-and-error modifications without understanding which parts of their prompt actually influence the model’s behavior.

Existing explanation techniques for deep learning models were designed for single-output tasks like classification. LLMs, however, generate sequences of tokens, so these techniques do not apply directly. Recent approaches like Chain-of-Thought prompting can provide natural language explanations, but they are prone to hallucinations and don't tell you which prompt components actually matter.

Introducing PromptExp

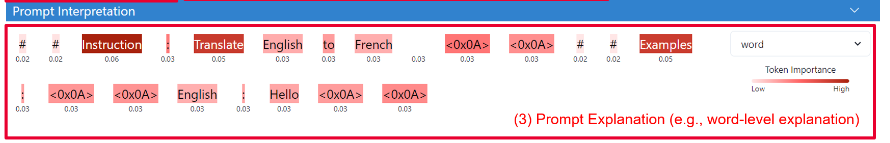

PromptExp assigns importance scores to each component of a prompt at multiple levels of granularity by aggregating token-level explanations. This flexibility helps developers understand and refine complex prompts with multiple components like few-shot examples or instructions.

Two Approaches to Token-Level Explanation

1. Aggregation-based Approach

Since LLMs generate tokens sequentially, we aggregate importance scores across all rounds of token generation:

Stage 1: Calculate token importance using existing techniques (we use Integrated Gradients) for each round of generation.
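For readers new to Integrated Gradients, here is a minimal sketch of its core computation on a toy differentiable function instead of an LLM. It assumes an all-zero baseline and approximates the path integral with a midpoint Riemann sum. `toy_model`, `toy_grad`, and their coefficients are hypothetical stand-ins for a model's output score and its gradient with respect to the input embeddings.

```python
from typing import Callable, List

Vector = List[float]


def integrated_gradients(
    grad_fn: Callable[[Vector], Vector],
    x: Vector,
    baseline: Vector,
    steps: int = 50,
) -> Vector:
    """Approximate IG attributions with a midpoint Riemann sum.

    attribution_d = (x_d - baseline_d) * average of dF/dx_d along the
    straight-line path from baseline to x.
    """
    total = [0.0] * len(x)
    for s in range(steps):
        alpha = (s + 0.5) / steps  # midpoint of each sub-interval of [0, 1]
        point = [b + alpha * (xi - b) for xi, b in zip(x, baseline)]
        for d, g in enumerate(grad_fn(point)):
            total[d] += g
    return [(xi - b) * t / steps for xi, b, t in zip(x, baseline, total)]


# Hypothetical toy "model": F(x) = sum_d c_d * x_d^2, with its analytic gradient.
COEFFS = [1.0, 0.5, 2.0]


def toy_model(x: Vector) -> float:
    return sum(c * xi * xi for c, xi in zip(COEFFS, x))


def toy_grad(x: Vector) -> Vector:
    return [2.0 * c * xi for c, xi in zip(COEFFS, x)]


x = [1.0, 2.0, 0.5]
baseline = [0.0, 0.0, 0.0]
attributions = integrated_gradients(toy_grad, x, baseline)
print(attributions)
```

Because the toy gradient grows linearly along the path, the midpoint sum is exact up to floating-point rounding, so the attributions add up to `toy_model(x) - toy_model(baseline)` (the completeness property of Integrated Gradients). With a real LLM, the gradients would instead come from automatic differentiation over the input embeddings, once per generation round.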

Stage 2: Aggregate scores across rounds using the formula:

$$\mathrm{imp}(p_i) = \sum_{k=1}^{M} w_k \cdot \mathrm{normalization}(m_{ik})$$

where:

- $m_{ik}$ is the importance score for token $i$ at round $k$
- $w_k$ is the weight at round $k$
- $M$ is the number of sampled rounds
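As a worked example with hypothetical numbers, take $M = 2$ sampled rounds with equal weights $w_1 = w_2 = 1/2$, and suppose token $p_i$ has a normalized importance of 0.2 at round 1 and 0.6 at round 2:

$$\mathrm{imp}(p_i) = w_1 \cdot 0.2 + w_2 \cdot 0.6 = \tfrac{1}{2}(0.2) + \tfrac{1}{2}(0.6) = 0.4$$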

We propose two weighting schemes:

- Equal weighting (AggEqu): All rounds weighted equally ($w_k = 1/M$), sampling evenly across the sequence

- Confidence weighting (AggConf): Rounds weighted by output token confidence

For an n-token prompt that generates m output tokens, we build an (n+m)×m importance matrix (one row per prompt or generated token, one column per generation round), then aggregate each prompt token's scores across all generation rounds.
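The aggregation over this matrix can be sketched as follows, assuming the per-round scores are already computed (for example by Integrated Gradients) and stored as a list of rows, one per token position, with one column per round. Several details are assumptions rather than the paper's definitions: normalization divides each round's absolute prompt-token scores by their sum, AggConf weights each round by its output-token confidence rescaled to sum to 1, and every round is used (M = m) instead of a sampled subset. The function names are hypothetical.

```python
from typing import List, Optional

Matrix = List[List[float]]  # (n + m) rows x m columns: token position x round


def normalize_round(matrix: Matrix, n: int, k: int) -> List[float]:
    """Assumed normalization: scale |scores| of the n prompt tokens at round k to sum to 1."""
    col = [abs(matrix[i][k]) for i in range(n)]
    total = sum(col)
    return [v / total if total > 0 else 0.0 for v in col]


def aggregate(matrix: Matrix, n: int, confidences: Optional[List[float]] = None) -> List[float]:
    """imp(p_i) = sum_k w_k * normalization(m_ik) for the n prompt tokens.

    confidences=None gives AggEqu (w_k = 1/M); otherwise AggConf, with weights
    proportional to each round's output-token confidence (an assumption).
    """
    rounds = len(matrix[0])
    if confidences is None:
        weights = [1.0 / rounds] * rounds
    else:
        z = sum(confidences)
        weights = [c / z for c in confidences]
    scores = [0.0] * n
    for k in range(rounds):
        norm = normalize_round(matrix, n, k)
        for i in range(n):
            scores[i] += weights[k] * norm[i]
    return scores


# Hypothetical scores: n = 2 prompt tokens, m = 2 generated tokens -> 4 x 2 matrix.
matrix = [
    [0.6, 0.1],  # prompt token 0
    [0.2, 0.3],  # prompt token 1
    [0.0, 0.4],  # generated token 1 (an input only from round 2 on)
    [0.0, 0.0],  # generated token 2 (never an input)
]
print(aggregate(matrix, n=2))                          # AggEqu
print(aggregate(matrix, n=2, confidences=[0.9, 0.3]))  # AggConf
```

With these numbers, AggEqu gives both prompt tokens about 0.5, while AggConf shifts weight toward token 0 (about 0.625 versus 0.375) because the round in which token 0 dominates has the higher confidence.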

2. Perturbation-based Approach

We systematically mask each token and observe output changes:

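- Generate a baseline output with the original prompt

- Mask one token to create a perturbed prompt

- Evaluate the impact using one of three techniques:

  - PerbLog: Compare logits between the original and perturbed outputs using:

    $$\mathrm{imp}(p_j) = \frac{1}{m} \sum_{o_i \in \mathrm{Output}_{\mathrm{Base}}} \left[ \mathrm{LogSoftmax}\big(\mathrm{logit}(V_i, P)[o_i]\big) - \mathrm{LogSoftmax}\big(\mathrm{logit}(V_i, P_j)[o_i]\big) \right]$$

    Here $P$ is the original prompt, $P_j$ is the prompt with token $p_j$ masked, and $m$ is the number of tokens in $\mathrm{Output}_{\mathrm{Base}}$.

  - PerbSim: Measure semantic similarity using SentenceBERT embeddings:

    $$\mathrm{imp}(p_j) = 1 - \mathrm{sim}\big(\mathrm{embed}(\mathrm{Output}_j), \mathrm{embed}(\mathrm{Output}_{\mathrm{Base}})\big)$$

  - PerbDis: Count overlapping tokens (simplest but least accurate):

    $$\mathrm{imp}(p_j) = 1 - \frac{|\mathrm{Output}_{\mathrm{Base}} \cap \mathrm{Output}_j|}{|\mathrm{Output}_j|}$$

- Repeat for each token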
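The loop and the three scoring rules can be sketched in plain Python. This is a minimal sketch under several assumptions: `toy_generate` is a hypothetical rule-based stand-in for an LLM call, masking replaces a token with a hypothetical `[MASK]` placeholder, PerbSim uses a bag-of-words cosine similarity in place of SentenceBERT embeddings, the intersection in PerbDis is treated as a multiset overlap, and PerbLog takes per-token log-probabilities that a model with logit access would have to supply.

```python
import math
from collections import Counter
from typing import Callable, List

MASK = "[MASK]"  # hypothetical mask placeholder


def perb_log(base_logprobs: List[float], perturbed_logprobs: List[float]) -> float:
    """PerbLog: mean drop in log-probability of each baseline output token o_i."""
    m = len(base_logprobs)
    return sum(b - p for b, p in zip(base_logprobs, perturbed_logprobs)) / m


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity of two bag-of-words count vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values()) * sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def perb_sim(base: List[str], perturbed: List[str]) -> float:
    """PerbSim: 1 - similarity (bag-of-words cosine stands in for SentenceBERT)."""
    return 1.0 - cosine(Counter(base), Counter(perturbed))


def perb_dis(base: List[str], perturbed: List[str]) -> float:
    """PerbDis: 1 - |OutputBase ∩ Output_j| / |Output_j|, using multiset overlap."""
    if not perturbed:
        return 1.0
    overlap = sum((Counter(base) & Counter(perturbed)).values())
    return 1.0 - overlap / len(perturbed)


def explain(prompt: List[str],
            generate: Callable[[List[str]], List[str]],
            score: Callable[[List[str], List[str]], float]) -> List[float]:
    """Mask each prompt token in turn and score how much the output changes."""
    base = generate(prompt)
    scores = []
    for j in range(len(prompt)):
        perturbed_prompt = prompt[:j] + [MASK] + prompt[j + 1:]
        scores.append(score(base, generate(perturbed_prompt)))
    return scores


def toy_generate(prompt: List[str]) -> List[str]:
    """Hypothetical stand-in for an LLM: the answer depends only on 'great'."""
    return ["positive", "sentiment"] if "great" in prompt else ["negative", "sentiment"]


prompt = "the movie was great".split()
print(explain(prompt, toy_generate, perb_dis))  # [0.0, 0.0, 0.0, 0.5]
print(explain(prompt, toy_generate, perb_sim))  # [0.0, 0.0, 0.0, 0.5]
```

Only masking "great" changes the toy output, so it is the only token with a nonzero score. PerbLog is kept separate because it needs model log-probabilities rather than generated text, which is why it requires logit access while PerbSim and PerbDis do not.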

Multi-granularity Flexibility

PromptExp extends beyond tokens to higher granularities by summing importance scores. Users can define custom components like [instruction], [example1], [example2] and see which parts matter most:

$$\mathrm{imp}(C_j) = \sum_{p_k \in C_j} \mathrm{imp}(p_k)$$

where $C_j$ is a user-defined component containing tokens $\{p_1, p_2, \ldots, p_z\}$. This allows developers to analyze importance at the level that matches their mental model of the prompt structure.
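Component-level scores follow directly from the token scores. The sketch below assumes each user-defined component is given as a half-open range of token indices; the component names, spans, and scores are hypothetical.

```python
from typing import Dict, List, Tuple


def component_importance(token_scores: List[float],
                         components: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """imp(C_j) = sum of imp(p_k) over the tokens p_k in C_j; spans are [start, end)."""
    return {name: sum(token_scores[start:end]) for name, (start, end) in components.items()}


# Hypothetical token scores for a six-token prompt split into three components.
scores = [0.05, 0.10, 0.30, 0.25, 0.20, 0.10]
spans = {"[instruction]": (0, 2), "[example1]": (2, 4), "[example2]": (4, 6)}
result = component_importance(scores, spans)
print(result)
print(max(result, key=result.get))  # the component that matters most
```

Because the score is a plain sum, word- or sentence-level explanations work the same way: only the spans change.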

Evaluation Results

We evaluated PromptExp with GPT-3.5 and Llama-2 on sentiment classification and on a suffix impact analysis:

Effectiveness (RQ1)

PerbSim consistently outperformed all other approaches:

- For sentiment classification: perturbing the tokens PerbSim ranked as important flipped the predicted label 68% of the time, versus 29% for unimportant tokens (Llama-2)

- For suffix impact analysis: PerbSim showed the highest correlation between suffix importance and output length reduction

PerbDis performed worst because it only measures token overlap, while PerbLog suffered on GPT-3.5 because logit access there is limited to the top 20 tokens.

Parameter Impact (RQ2)

- Vocabulary access (K): Access to logits for more vocabulary tokens improved PerbLog's effectiveness

- Sampling rounds (M): Increasing the number of sampled rounds helped AggEqu but not AggConf, because the highest-confidence rounds already suffice

Real-World Validation: User Study

We validated PromptExp with 10 industry developers across three tasks:

Explanation Quality: 84% of cases rated as reasonable/accurate (avg: 4.2/5)

- Example: Correctly identified “AC” and “five” as most important in “What does AC stand for? Explain like I am five”

Understanding Misclassifications: 65% found explanations helpful (avg: 3.82/5)

- Example: Revealed that the model overweighted “fun movie” while ignoring “I do not like it” in a sentiment misclassification

Prompt Compression: 80% successfully compressed prompts (avg: 4.2/5)

- Developers removed low-importance phrases to reduce costs while maintaining functionality
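In the user study, developers compressed prompts by hand. The same idea can be sketched as a simple filter that drops components whose importance falls below a threshold and rejoins the rest in their original order. The threshold, component texts, and scores below are hypothetical, and because the goal is to preserve the prompt's behavior, a compressed prompt would still need to be checked against the original outputs.

```python
from typing import List, Tuple


def compress_prompt(components: List[Tuple[str, float]], threshold: float) -> str:
    """Keep (text, importance) components with importance >= threshold, in original order."""
    kept = [text for text, importance in components if importance >= threshold]
    return " ".join(kept)


# Hypothetical component-level importance scores.
parts = [
    ("Classify the sentiment of the review.", 0.45),
    ("Please be careful and think about it.", 0.05),
    ("Review: I do not like it, even if it is a fun movie.", 0.50),
]
print(compress_prompt(parts, threshold=0.1))
```

Here the low-importance filler sentence is dropped, shortening the prompt (and its token cost) while keeping the instruction and the input.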

Practical Insights

Performance Considerations

- Aggregation-based: O(M × X) complexity, parallelizable to O(X)

- Perturbation-based: O(n × I) complexity, parallelizable with batch inference

- Example: Explaining a 50-token prompt takes ~7 seconds, compared with 4.74 seconds for pure inference
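The perturbation-based approach parallelizes because the n perturbed prompts do not depend on each other, so they can all be built up front and sent to a batch inference endpoint. A minimal sketch, assuming a hypothetical `[MASK]` placeholder and a fixed batch size; the actual batch inference call depends on the serving stack and is not shown.

```python
from typing import Iterator, List

MASK = "[MASK]"  # hypothetical mask placeholder


def perturbed_prompts(tokens: List[str]) -> List[List[str]]:
    """Build all n single-token perturbations of the prompt up front."""
    return [tokens[:j] + [MASK] + tokens[j + 1:] for j in range(len(tokens))]


def batches(items: List[List[str]], size: int) -> Iterator[List[List[str]]]:
    """Yield consecutive chunks of at most `size` prompts for batch inference."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


prompts = perturbed_prompts(["What", "does", "AC", "stand", "for", "?"])
print([len(b) for b in batches(prompts, size=4)])  # [4, 2]
```

With a batch size B, the n perturbed generations take ceil(n / B) batched calls instead of n sequential ones, which is why the overhead over a single inference stays modest.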

Choosing the Right Approach

- For accuracy: Use PerbSim (best overall performance)

- For efficiency with model access: Use AggConf (less overhead, strong effectiveness)

- For safety-constrained environments: Use PerbSim or PerbDis (no logit access needed)

- For closed-source models: Use perturbation-based approaches (black-box compatible)

Conclusion

PromptExp provides a practical solution for understanding how different parts of prompts influence LLM behavior. By offering both white-box and black-box approaches at multiple granularities, it helps developers move beyond trial-and-error prompt engineering to make informed decisions about prompt design and optimization.

The strong performance of PerbSim across different models and tasks, combined with positive developer feedback, demonstrates PromptExp’s potential to enhance LLM interpretability and reduce development costs through better prompt understanding.